Deriving spectral chords from biosignals


This notebook demonstrates the use of the biotuner toolbox (https://github.com/antoineBellemare/biotuner/).

The biotuner toolbox extracts harmonic information from biological time series, which in turn informs the computation of musical structures. More specifically, we will show how to derive spectral chords based on the harmonicity of spectral peaks.

Spectral chords from spectral centroid

The first step in computing spectral chords is to decompose the signal into multiple components, so that several frequencies are associated with each timepoint. To do so, we use Empirical Mode Decomposition (EMD), which decomposes the signal into a set of Intrinsic Mode Functions (IMFs). Spectral chords can then be computed from the consonance between IMFs at each timepoint: for each timepoint, the consonance is computed between the spectral centroids of the IMFs.
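To make the notion of a spectral centroid concrete, here is a minimal NumPy sketch that computes it for one window of a component. It is an illustration only: the helper name `spectral_centroid` is hypothetical, the two sine waves merely stand in for IMFs, and the amplitude weighting is an assumption that may differ from what biotuner does internally.

```python
import numpy as np

def spectral_centroid(window, sf):
    """Amplitude-weighted mean frequency (Hz) of one signal window."""
    spectrum = np.abs(np.fft.rfft(window))
    freqs = np.fft.rfftfreq(len(window), d=1.0 / sf)
    return float(np.sum(freqs * spectrum) / np.sum(spectrum))

# Two synthetic components standing in for IMFs (not real EEG data)
sf = 1000
t = np.arange(0, 1, 1 / sf)
imf_like = [np.sin(2 * np.pi * 10 * t), np.sin(2 * np.pi * 15 * t)]

# One centroid per component: these are the "notes" available at this window
centroids = [spectral_centroid(x, sf) for x in imf_like]
print(centroids)
```

Sliding such a window along each IMF gives one frequency per IMF per timepoint, which is the input needed to evaluate consonance across IMFs.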

import numpy as np

from biotuner.biotuner_object import compute_biotuner

import time

import warnings

warnings.filterwarnings('ignore', category=DeprecationWarning)

warnings.filterwarnings('ignore', category=RuntimeWarning)

# Load dataset

data = np.load('../data/EEG_example.npy')

# Define frequency bands for peaks_function = 'fixed'

FREQ_BANDS = [[1, 3], [3, 7], [7, 12], [12, 18], [18, 30], [30, 45]]

# Select a single time series

data_ = data[39]

start = time.time()

# Initialize biotuner object

biotuning = compute_biotuner(sf = 1000, peaks_function = 'EMD', precision = 0.5, n_harm = 10)

# Extract spectral peaks

biotuning.peaks_extraction(data_, FREQ_BANDS = FREQ_BANDS, ratios_extension = True, max_freq = 30, n_peaks=5,
                           graph=False, min_harms=2)

# Compute spectromorphological metric on each IMF

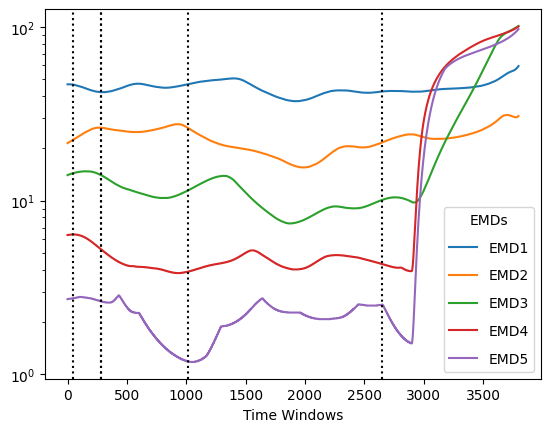

biotuning.compute_spectromorph(comp_chords=True, method='SpectralCentroid', min_notes=4,

cons_limit=0.5, cons_chord_method='cons',

window=1000, overlap=1, graph=True)

<Figure size 640x480 with 0 Axes>

# Frequencies of each IMF at the timepoints where the consonance threshold is reached

biotuning.spectro_chords

[[6.39, 46.56, 22.37, 2.74],

[14.04, 2.63, 42.1, 26.29],

[14.03, 2.63, 42.1, 26.29],

[46.78, 3.9, 26.19, 1.19],

[21.59, 4.32, 10.08, 2.52]]

Spectral chords from instantaneous frequencies

You can also compute timepoint consonance between instantaneous frequencies using the Hilbert-Huang transform. To do so, we initialize the biotuner object with the peaks function 'HH1D_max', which means that the Hilbert-Huang transform is computed on each IMF, yielding estimates of the instantaneous frequencies (IF).
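As a rough illustration of how an instantaneous frequency can be obtained from a single oscillatory component, the sketch below builds the analytic signal with an FFT and differentiates its unwrapped phase. It uses NumPy only; the function names are hypothetical and this is not biotuner's implementation of 'HH1D_max'.

```python
import numpy as np

def analytic_signal(x):
    """Analytic signal via the FFT: keep DC, double positive frequencies, drop negative ones."""
    n = len(x)
    spectrum = np.fft.fft(x)
    weights = np.zeros(n)
    weights[0] = 1.0
    if n % 2 == 0:
        weights[n // 2] = 1.0          # Nyquist bin is kept once
        weights[1:n // 2] = 2.0
    else:
        weights[1:(n + 1) // 2] = 2.0
    return np.fft.ifft(spectrum * weights)

def instantaneous_frequency(x, sf):
    """Instantaneous frequency (Hz) from the derivative of the unwrapped phase."""
    phase = np.unwrap(np.angle(analytic_signal(x)))
    return np.diff(phase) * sf / (2 * np.pi)

# A synthetic 12 Hz component standing in for one IMF
sf = 1000
t = np.arange(0, 1, 1 / sf)
component = np.sin(2 * np.pi * 12 * t)
inst_freq = instantaneous_frequency(component, sf)
print(np.median(inst_freq))
```

Applying this to every IMF yields an array with one frequency per timepoint and per IMF, comparable in layout to the `IF` attribute inspected in the cells that follow.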

# Choose a time series

data_HH = data[33]

# Initialize biotuner object with Hilbert-Huang method

bt_HH_chords = compute_biotuner(sf = 1000, peaks_function = 'HH1D_max', precision = 0.5, n_harm = 20,
                                ratios_n_harms = 5, ratios_inc_fit = True, ratios_inc = True)

# Extract spectral peaks

bt_HH_chords.peaks_extraction(data_HH, ratios_extension = True, max_freq = 60, n_peaks=5,
                              graph=False, min_harms=2, verbose=False)

# Look at the shape of instantaneous frequencies (n points, nIMFs)

bt_HH_chords.IF.shape

(4000, 5)

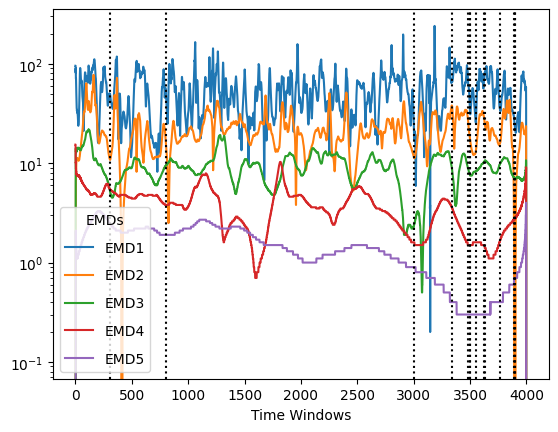

from biotuner.metrics import timepoint_consonance

# Format IF

data_IF = np.moveaxis(bt_HH_chords.IF, 0, 1)

# Compute spectral chords

chords, positions = timepoint_consonance(np.round(data_IF, 1), method='harmsim', limit=50, min_notes=4,
                                         graph=True)

chords

[[2.5, 5.5, 5.5, 11.0, 49.5],

[1.9, 3.8, 9.5, 15.2, 49.3],

[0.9, 1.6, 2.4, 11.2, 16.8],

[0.4, 3.6, 8.8, 37.8, 66.0],

[0.3, 1.5, 8.9, 27.0, 66.0],

[0.3, 1.5, 7.8, 19.2, 42.0],

[0.3, 1.5, 7.5, 16.3, 31.5],

[0.3, 1.6, 8.4, 14.4, 33.0],

[0.3, 1.5, 10.2, 30.6, 60.3],

[0.3, 1.5, 10.5, 26.1, 76.2],

[0.4, 1.6, 9.6, 15.4, 36.8],

[0.7, 2.7, 7.2, 0.1, 27.3],

[0.7, 2.8, 7.0, 22.4],

[0.7, 2.8, 7.0, 21.7]]
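The idea behind selecting chords by timepoint consonance can be sketched in plain Python. The example below scores each pair of frequencies with the percentage-similarity formula of Gill and Purves (2009), averages the scores over all pairs at a timepoint, and keeps the timepoints whose average exceeds a limit. All function names are hypothetical, and the averaging rule, the rational approximation (`max_denominator`) and the exact thresholding are assumptions; `timepoint_consonance` may differ in these details.

```python
from fractions import Fraction
from itertools import combinations

def dyad_similarity(f1, f2, max_denominator=100):
    """Percentage similarity of a frequency pair, after Gill & Purves (2009)."""
    ratio = Fraction(max(f1, f2) / min(f1, f2)).limit_denominator(max_denominator)
    x, y = ratio.numerator, ratio.denominator
    return (x + y - 1) / (x * y) * 100

def mean_similarity(freqs):
    """Average similarity over every pair of frequencies."""
    pairs = list(combinations(freqs, 2))
    return sum(dyad_similarity(a, b) for a, b in pairs) / len(pairs)

def find_chords(freq_rows, limit=50, min_notes=4):
    """Return (chords, positions) for rows whose mean similarity exceeds limit."""
    chords, positions = [], []
    for i, row in enumerate(freq_rows):
        notes = [f for f in row if f > 0]
        if len(notes) >= min_notes and mean_similarity(notes) > limit:
            chords.append(notes)
            positions.append(i)
    return chords, positions

# Two made-up timepoints: a harmonic stack and an inharmonic set
rows = [
    [2.0, 4.0, 6.0, 8.0],
    [2.0, 3.1, 7.3, 11.9],
]
chords_demo, positions_demo = find_chords(rows)
print(chords_demo, positions_demo)
```

Only the first row is retained, because its frequencies form simple integer ratios (2:1, 3:2, 4:3), whereas the second row reduces to ratios with large numerators and denominators.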

from biotuner.biotuner_utils import chords_to_ratios

# Transform the set of frequencies to a set of integer ratios

chords_ratios2, chords_bounded2 = chords_to_ratios(chords, harm_limit=2, spread=True)

chords_ratios2

[[25, 28, 55, 110, 124],

[19, 38, 48, 76, 123],

[9, 16, 24, 28, 42],

[2, 3, 5],

[3, 4, 6, 8, 10],

[3, 4, 5, 6, 7],

[3, 4, 5, 10],

[3, 4, 5, 9, 10],

[3, 4, 6, 10, 19],

[3, 4, 7, 8, 12],

[4, 8, 12, 19, 23],

[1, 2, 3, 4, 9],

[7, 14, 18, 28],

[7, 14, 18, 27]]

# Rescale the set of integer ratios within one octave,

# such that the highest value is less than twice the first value

chords_ratios, chords_bounded = chords_to_ratios(chords, harm_limit=2, spread=False)

chords_ratios

[[25, 28, 31],

[19, 24, 31, 38],

[9, 10, 12, 14, 16],

[4, 5, 6],

[3, 4, 5, 6],

[3, 4, 5, 6],

[3, 4, 5],

[3, 4, 5],

[3, 4, 5],

[3, 4, 6],

[4, 5, 6, 8],

[1, 2],

[7, 9, 14],

[7, 9, 14]]
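The octave-bounding step can be illustrated with a short sketch: each frequency is folded into the octave above the lowest note, and the result is expressed as small integers relative to that note. The helper names and the `max_denominator` setting are hypothetical, and this is a simplified stand-in rather than the logic of `chords_to_ratios`.

```python
import math
from fractions import Fraction

def fold_into_octave(freq, low):
    """Multiply or divide by 2 until low <= freq < 2 * low."""
    while freq >= 2 * low:
        freq /= 2
    while freq < low:
        freq *= 2
    return freq

def to_integer_ratios(freqs, max_denominator=16):
    """Express frequencies relative to the lowest one as small integers."""
    base = min(freqs)
    fracs = [Fraction(f / base).limit_denominator(max_denominator) for f in freqs]
    # Scale every fraction by the least common multiple of the denominators
    common = math.lcm(*(fr.denominator for fr in fracs))
    return sorted(set(int(fr * common) for fr in fracs))

chord = [3.0, 4.0, 5.0, 10.0]
folded = [fold_into_octave(f, low=min(chord)) for f in chord]
ratios_demo = to_integer_ratios(folded)
print(folded, ratios_demo)
```

Here the 10 Hz note is folded down onto 5 Hz, so the four-note chord collapses to three distinct pitch classes within one octave. Note that `math.lcm` with several arguments requires Python 3.9 or later.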

Listening to spectral chords

from biotuner.biotuner_utils import listen_chords

listen_chords(chords_ratios[:], mult=40, duration=0.5)

pygame 2.1.2 (SDL 2.0.16, Python 3.10.8)

Hello from the pygame community. https://www.pygame.org/contribute.html

---------------------------------------------------------------------------

error Traceback (most recent call last)

Cell In[7], line 2

1 from biotuner.biotuner_utils import listen_chords

----> 2 listen_chords(chords_ratios[:], mult=40, duration=0.5)

File ~/git/biotuner/biotuner/biotuner_utils.py:1274, in listen_chords(chords, mult, duration)

1272 pygame_lib = pygame

1273 pygame_lib.init()

-> 1274 pygame_lib.mixer.init(frequency=44100, size=-16, channels=1, buffer=512)

1275 for c in chords:

1276 hz = c[0] * mult # The fundamental frequency

error: Audio target 'directsound' not available

listen_chords(chords_bounded[:], mult=300, duration=1)

Exporting spectral chords as MIDI files

We will now see how to export the spectral chords in MIDI format while preserving their microtonality.
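Microtonal pitches are commonly represented in MIDI by pairing the nearest equal-tempered note with a pitch-bend (pitchwheel) value that covers the remaining offset. The sketch below shows that general technique; the function name is hypothetical, the A4 = 440 Hz reference and the bend range of 2 semitones are assumptions, and `create_midi` may use a different mapping.

```python
import math

def freq_to_midi_with_bend(freq, bend_range=2.0):
    """Return (note, pitchwheel) approximating freq in Hz.

    bend_range is the assumed pitch-bend range of the synth, in semitones (+/-).
    """
    exact = 69 + 12 * math.log2(freq / 440.0)   # fractional MIDI note number
    note = int(round(exact))                     # nearest equal-tempered note
    offset = exact - note                        # remainder, in semitones
    pitch = int(round(offset / bend_range * 8192))
    pitch = max(-8192, min(8191, pitch))         # clamp to the 14-bit signed range
    return note, pitch

note_a4, bend_a4 = freq_to_midi_with_bend(440.0)   # (69, 0): exactly A4, no bend
note_x, bend_x = freq_to_midi_with_bend(450.0)     # A4 bent upwards
print(note_a4, bend_a4, note_x, bend_x)
```

Because a pitchwheel message affects a whole channel, each simultaneous note of a microtonal chord generally needs its own channel, which is consistent with the one-channel-per-track layout visible in the MIDI output further down.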

x=1000

if 0 < x < 100:
    print('ok')

from biotuner.biotuner_utils import create_midi, rebound

# Define the number of octaves up

n_octaves = 4

mult = 2**n_octaves

new_chords = []

for chord in chords[1:4]:
    # Take the original frequencies and rebound them within one octave range
    c = [rebound(x, low=np.min(chord)-1, high=np.min(chord)*2, octave=2) for x in chord]
    # Then, move the chord n octaves up to be in the hearing range
    # (np.int was removed in NumPy 1.24; the built-in int behaves the same here)
    c = [int(x*mult) for x in c]
    new_chords.append(c)

# set 1 second duration for each chord

durations = [1] * len(new_chords)

midi_file = create_midi(new_chords, durations, microtonal=True, filename='test')

midi_file

MidiFile(type=1, ticks_per_beat=480, tracks=[

MidiTrack([control_change channel=0 control=81 value=120 time=0]),

MidiTrack([

Message('pitchwheel', channel=0, pitch=4148, time=0),

Message('note_on', channel=0, note=22, velocity=64, time=0),

Message('note_off', channel=0, note=22, velocity=64, time=480),

Message('pitchwheel', channel=0, pitch=2555, time=480),

Message('note_on', channel=0, note=9, velocity=64, time=480),

Message('note_off', channel=0, note=9, velocity=64, time=960),

Message('pitchwheel', channel=0, pitch=5429, time=960),

Message('note_on', channel=0, note=1, velocity=64, time=960),

Message('note_off', channel=0, note=1, velocity=64, time=1440)]),

MidiTrack([

Message('pitchwheel', channel=1, pitch=4148, time=0),

Message('note_on', channel=1, note=22, velocity=64, time=0),

Message('note_off', channel=1, note=22, velocity=64, time=480),

Message('pitchwheel', channel=1, pitch=2866, time=480),

Message('note_on', channel=1, note=19, velocity=64, time=480),

Message('note_off', channel=1, note=19, velocity=64, time=960)]),

MidiTrack([

Message('pitchwheel', channel=2, pitch=4905, time=0),

Message('note_on', channel=2, note=26, velocity=64, time=0),

Message('note_off', channel=2, note=26, velocity=64, time=480),

Message('pitchwheel', channel=2, pitch=4905, time=480),

Message('note_on', channel=2, note=14, velocity=64, time=480),

Message('note_off', channel=2, note=14, velocity=64, time=960)]),

MidiTrack([

Message('pitchwheel', channel=3, pitch=4148, time=0),

Message('note_on', channel=3, note=22, velocity=64, time=0),

Message('note_off', channel=3, note=22, velocity=64, time=480),

Message('pitchwheel', channel=3, pitch=1121, time=480),

Message('note_on', channel=3, note=17, velocity=64, time=480),

Message('note_off', channel=3, note=17, velocity=64, time=960)]),

MidiTrack([

Message('pitchwheel', channel=4, pitch=1, time=0),

Message('note_on', channel=4, note=31, velocity=64, time=0),

Message('note_off', channel=4, note=31, velocity=64, time=480),

Message('pitchwheel', channel=4, pitch=5109, time=480),

Message('note_on', channel=4, note=11, velocity=64, time=480),

Message('note_off', channel=4, note=11, velocity=64, time=960)])

])